The University AI Commercialization Gap

August 15, 2025

Part 1 of 3: Why Academia’s AI Breakthroughs Fail to Reach Market

“Innovation doesn’t stop at discovery. It stops when we fail to translate.”

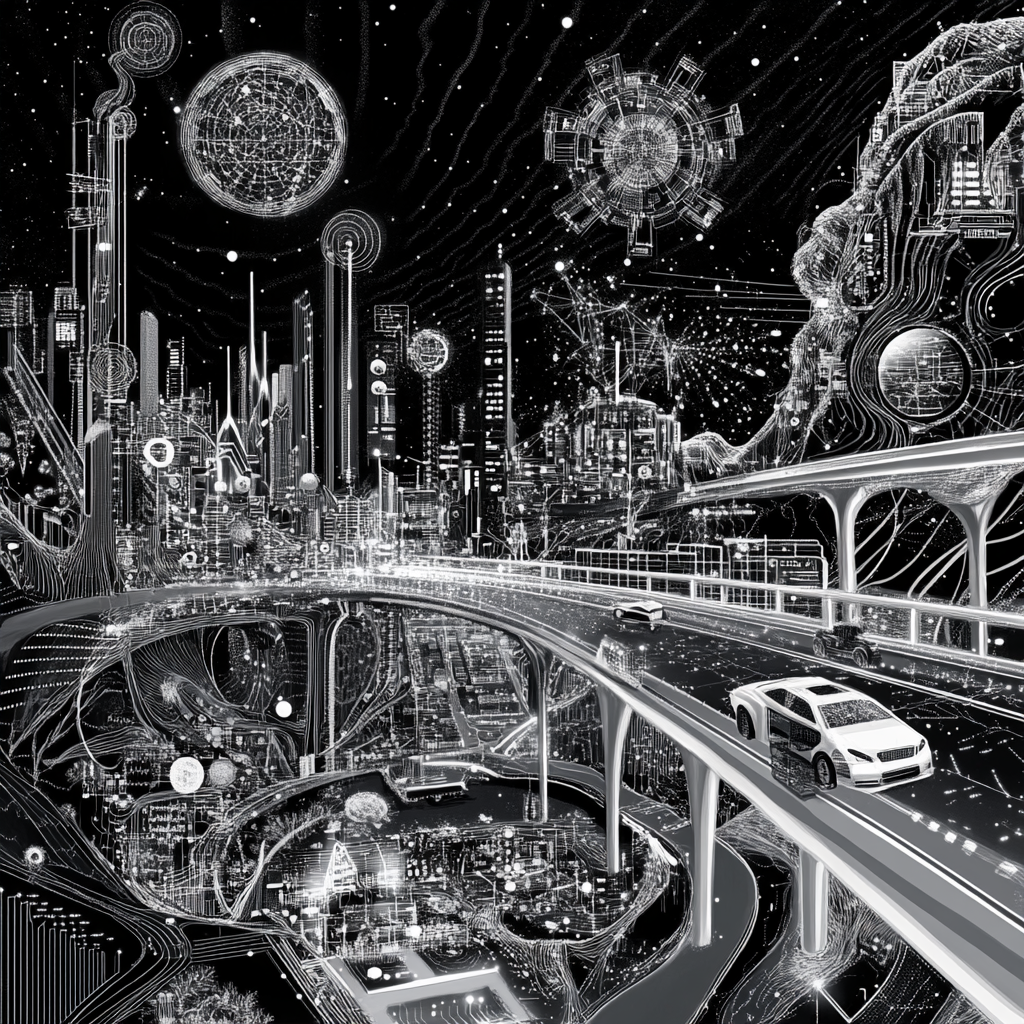

A university AI system that could save data centers $2 billion annually in energy costs may never leave the lab. Not because the technology doesn’t work—but because proving it works requires the very infrastructure it is designed to improve.

Here is the breakthrough: university engineers have developed algorithms that predict, in real time, how novel thermal interface materials and phase-change cooling compounds will perform under different computational loads, potentially reducing data center energy consumption by 40% while extending server lifespan. The technology combines materials science with machine learning to optimize cooling performance as workloads shift, a critical capability as AI applications drive unprecedented energy demands.

Data center operators are extremely interested, especially given that cooling accounts for 40% of their electricity costs. But here is the systematic challenge: to prove commercial viability, researchers need real-world validation data from actual data centers testing these new materials under varying conditions. To get access to those facilities and funding for materials testing, they need to demonstrate commercial viability first.
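It helps to see how a cooling improvement translates into facility-wide savings. The minimal sketch below assumes the simplest possible model, in which cooling is a fixed share of a facility's electricity and only that share shrinks. The function names and inputs are illustrative, not measured results.

```python
def facility_savings_fraction(cooling_share: float, cooling_reduction: float) -> float:
    """Fraction of total facility electricity saved when only cooling energy is reduced.

    Assumed model: total = cooling + everything else, and the improvement
    touches only the cooling term.
    """
    if not (0.0 <= cooling_share <= 1.0 and 0.0 <= cooling_reduction <= 1.0):
        raise ValueError("share and reduction must be fractions in [0, 1]")
    return cooling_share * cooling_reduction


def annual_savings(total_cost: float, cooling_share: float, cooling_reduction: float) -> float:
    """Dollar savings per year under the same model (total_cost is a hypothetical input)."""
    return total_cost * facility_savings_fraction(cooling_share, cooling_reduction)


if __name__ == "__main__":
    # Cooling at 40% of electricity, with a hypothetical 40% cut in cooling energy
    print(round(facility_savings_fraction(0.40, 0.40), 4))  # 0.16
```

Under this model, facility-wide savings can never exceed the cooling share itself. It is therefore worth being explicit about whether a percentage refers to cooling energy or to total consumption.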

How do you break out of this loop?

While we navigate validation requirements, data centers continue to consume 1% of global electricity, a share that is climbing rapidly as AI applications proliferate. Meanwhile, we are missing opportunities to deploy breakthrough materials that could dramatically reduce that footprint.

This is not a story about inadequate engineering—it is about the structural barriers that systematically prevent breakthrough AI research from reaching the critical infrastructure that needs it most.

The Commercialization Reality

The vast majority of breakthrough AI research never leaves university labs. Universities publish thousands of AI papers annually, yet fewer than 5% of the technologies they describe reach commercial deployment. That gap represents billions in lost economic value and societal impact.

Drawing on my background in both Fortune 500 AI system deployment and university technology transfer, I have engaged directly with the complexities on each side of the commercialization process. In the corporate context, I led the development and assessment of AI systems, focusing on practical implementation, integration challenges, and adherence to compliance standards. In university technology transfer, my focus is on evaluating new AI technologies for their technical merit, intellectual property strength, and genuine market opportunity.

The situation I observe is not confined to a single university; it exemplifies a broader national pattern. Many American universities are hindered by legacy systems in technology transfer and commercialization: organizational structures, policy frameworks, and incentive models created for an earlier era of innovation. These legacy systems include rigid disclosure processes, sequential patent licensing pathways, siloed departmental approaches, and administrative priorities focused more on intellectual property metrics than on real-world impact. This institutional rigidity closely parallels the challenges organizations face with outdated IT infrastructure, where historical systems limit agility and slow the adoption of new technologies. As technological advancement accelerates, especially in fields such as artificial intelligence, legacy university frameworks increasingly struggle to keep up, leaving many research breakthroughs underused or never deployed.

This widening commercialization gap is not solely the product of administrative rigidity or outdated processes. At its core, it is driven by a fundamental misconception about the nature of artificial intelligence and how it should be managed in the context of technology transfer.

The Root Cause: AI Is Not Software

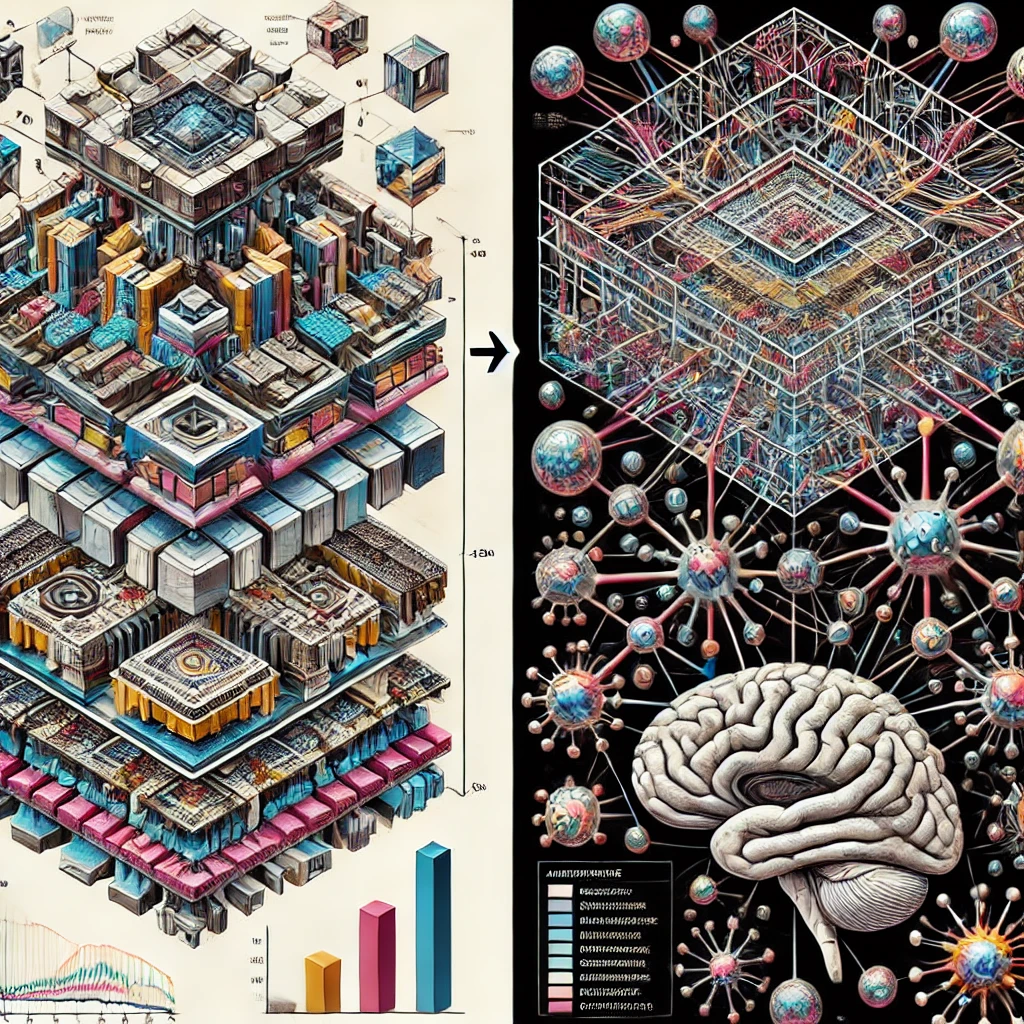

The real barrier is not a shortage of innovation, but a category error: universities handle artificial intelligence using frameworks designed for traditional software. AI systems learn, adapt, and evolve—yet they are evaluated, protected, and licensed as if they were static code packages.

This conceptual oversight has measurable consequences. The fundamental difference between artificial intelligence and traditional software extends beyond licensing models—it is rooted in how these technologies actually develop their capabilities and operate in real-world environments.

AI Systems Are Emergent, Not Programmed

Traditional software is explicitly programmed—every function, every decision tree, every output is predetermined by human developers. AI systems, by contrast, develop their capabilities through learning processes that even their creators cannot fully predict or control. As MIT’s Tommi Jaakkola notes, “We’re not programming these systems; we’re training them, and the difference is profound.”

This distinction brings to light four critical differences that demand new approaches to licensing and commercialization:

1. Emergent Behavior vs. Predetermined Logic

Traditional Software: Every possible output is explicitly coded. If input A occurs, output B is guaranteed.

AI Systems: Capabilities emerge from training data. The same input can produce different outputs based on learned patterns, context, and probabilistic decision-making. A medical AI might diagnose the same symptoms differently based on population data it has learned from.

2. Data as the Core Asset, Not Code

Traditional Software: The primary value is embedded in the algorithms and lines of code.

AI Systems: The value resides in the trained model parameters and, crucially, in the data used to develop those parameters. As Google’s Jeff Dean observes, “The model is the product of the data and the learning process, not the code that created it.” Identical code may produce divergent capabilities if trained on different data sets.

3. Continuous Learning vs. Static Deployment

Traditional Software: Once deployed, the software remains unchanged until actively updated by developers.

AI Systems: These systems are subject to “model drift,” where performance degrades as real-world data patterns shift. Maintaining effectiveness requires continuous monitoring, retraining, and adaptation to new data and evolving conditions.

4. Probabilistic vs. Deterministic Outputs

Traditional Software: The same input always produces the same output—a fully predictable process.

AI Systems: Outputs are inherently probabilistic, with results expressed as confidence scores or likelihood estimates. For a given input, the system may produce a range of outputs, influenced by both uncertainty in the data and the model’s internal representation.
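Point 2 above states that identical code can yield different capabilities depending on its training data. This can be demonstrated with about the simplest learner there is. The hypothetical `fit_line` function below performs an ordinary least-squares fit of crop yield against irrigation. Run unchanged on two made-up toy datasets, it learns different parameters and would therefore make different recommendations. The dataset names and values are invented for illustration and are not real agronomic data.

```python
def fit_line(xs, ys):
    """Ordinary least squares for y = slope * x + intercept (same code for every dataset)."""
    n = len(xs)
    if n < 2 or n != len(ys):
        raise ValueError("need at least two paired observations")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("x values must not all be equal")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


if __name__ == "__main__":
    # Hypothetical toy data: irrigation (inches) -> relative yield
    region_a = ([1, 2, 3, 4], [2.0, 4.0, 6.0, 8.0])
    region_b = ([1, 2, 3, 4], [5.0, 5.5, 6.0, 6.5])
    for name, (xs, ys) in [("region_a", region_a), ("region_b", region_b)]:
        slope, intercept = fit_line(xs, ys)
        print(f"{name}: slope={slope:.2f}, intercept={intercept:.2f}")
```

The "capability" here is entirely in the learned `slope` and `intercept`. Shipping the function without the data or the fitted parameters transfers almost nothing of value.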

Real-World Examples That Prove the Distinction

Medical Diagnosis AI: A traditional medical software package might implement decision trees: “If symptom A and test result B, then suggest diagnosis C.” An AI diagnostic system learns patterns from millions of patient records and might suggest diagnosis C with 87% confidence, diagnosis D with 12% confidence, and flag unusual patterns it has never seen before. The AI’s value isn’t in its code—it’s in its learned understanding of medical patterns.

Agricultural Optimization: Traditional farm management software calculates irrigation schedules based on programmed formulas. An AI agricultural system learns from weather patterns, soil conditions, crop genetics, and historical yield data to make recommendations that improve over time. The same AI system trained on Iowa corn data will perform differently than one trained on California vineyard data, even with identical code.
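The medical diagnosis contrast can be sketched in a few lines. The rule-based function encodes one hand-written rule. The "learned" version instead estimates diagnosis frequencies from past records, returns them as ranked confidence scores, and flags symptom patterns it has seen too rarely to trust. This is a deliberately toy frequency-counting model, not how production diagnostic AI works. The records, feature encoding, and `min_support` threshold are hypothetical, with counts chosen only to mirror the 87%/12% figures in the example.

```python
from collections import Counter, defaultdict


def rule_based_diagnosis(symptom_a: bool, test_b: bool):
    """Traditional software: an explicit, hand-coded rule."""
    return "C" if symptom_a and test_b else None


def train(records):
    """'Learn' diagnosis frequencies per symptom pattern by counting past records.

    records: iterable of (pattern_tuple, diagnosis) pairs.
    """
    counts = defaultdict(Counter)
    for pattern, diagnosis in records:
        counts[pattern][diagnosis] += 1
    return counts


def learned_diagnosis(model, pattern, min_support=5):
    """Return ranked (diagnosis, confidence) pairs plus an 'unfamiliar pattern' flag."""
    seen = model.get(pattern, Counter())
    n = sum(seen.values())
    if n < min_support:
        return [], True  # too few (or zero) examples: flag for human review
    return [(dx, c / n) for dx, c in seen.most_common()], False


if __name__ == "__main__":
    # Hypothetical records keyed by (symptom_a, test_b)
    records = [((True, True), "C")] * 87 + [((True, True), "D")] * 12 + [((True, True), "E")]
    model = train(records)
    print(rule_based_diagnosis(True, True))
    print(learned_diagnosis(model, (True, True)))
    print(learned_diagnosis(model, (False, True)))
```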

Why This Matters for Technology Transfer

Treating AI as conventional software leads to a fundamental misalignment between technology transfer models and the technical realities of AI systems. Unlike static software products, AI is a learning system whose commercial success depends on factors that traditional licensing frameworks rarely address. Specifically, successful AI commercialization requires:

- Continuous access to relevant data for model maintenance and improvement

- Ongoing retraining to adapt to shifting data and deployment conditions

- Customization for each unique application and environment

- Robust monitoring to detect and correct model drift over time

- Compliance management as regulatory standards evolve
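Of these requirements, drift monitoring is the easiest to make concrete. The Population Stability Index (PSI) is one commonly used way to compare a feature's distribution in live data against its training distribution. The sketch below bins both samples using cut points taken from the training data. The 0.2 alert threshold is a widely cited rule of thumb, not a standard. The sample data are synthetic, and `needs_retraining` is a hypothetical helper name.

```python
import math
import random
from bisect import bisect_right


def _bin_fractions(values, edges):
    """Fraction of values in each bin; `edges` are sorted interior cut points."""
    counts = [0] * (len(edges) + 1)
    for v in values:
        counts[bisect_right(edges, v)] += 1
    return [c / len(values) for c in counts]


def psi(expected, actual, n_bins=10, eps=1e-6):
    """Population Stability Index of `actual` relative to `expected`."""
    ordered = sorted(expected)
    # Interior cut points at training-data quantiles (roughly equal-count bins)
    edges = [ordered[int(len(ordered) * k / n_bins)] for k in range(1, n_bins)]
    e = _bin_fractions(expected, edges)
    a = _bin_fractions(actual, edges)
    # eps avoids log(0) when a bin is empty in one sample
    return sum((ai - ei) * math.log((ai + eps) / (ei + eps)) for ei, ai in zip(e, a))


def needs_retraining(training_feature, live_feature, threshold=0.2):
    """Flag a feature whose live distribution has shifted past the assumed threshold."""
    return psi(training_feature, live_feature) > threshold


if __name__ == "__main__":
    rng = random.Random(42)
    training = [rng.gauss(0.0, 1.0) for _ in range(5000)]  # synthetic training data
    stable = [rng.gauss(0.0, 1.0) for _ in range(5000)]    # same distribution
    shifted = [rng.gauss(1.0, 1.0) for _ in range(5000)]   # mean has drifted
    print(round(psi(training, stable), 3), needs_retraining(training, stable))
    print(round(psi(training, shifted), 3), needs_retraining(training, shifted))
```

In practice a check like this would run per feature on a schedule. An alert would trigger investigation and possibly retraining, which is exactly the ongoing obligation a one-time software license does not capture.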

As Yann LeCun of Meta AI explains, “AI systems are not software in the traditional sense—they’re learned representations of patterns in data.” Stanford’s Fei-Fei Li adds, “The intelligence in AI comes from the data and the learning process, not from hand-coded rules.” Ignoring these requirements explains why traditional university approaches to software commercialization often struggle with AI—and why so many promising academic innovations stall before reaching the market.

This isn't a subtle technical difference; it's a fundamental paradigm shift that invalidates traditional software licensing approaches. Recognizing these differences is only the first step, however. The commercialization journey also presents a distinct set of challenges that set AI apart from other fields such as biotechnology or hardware.

The AI Commercialization Challenge

Unlike biotech’s linear lab-to-clinic pipeline or hardware’s prototype-to-production path, AI development depends on resources that live primarily in industry, not academia: massive compute clusters, real-world datasets, and deployment environments that can handle unpredictable conditions.

Consider the computational gap alone. Training a competitive large language model requires infrastructure costing millions of dollars—far beyond most university budgets. While initiatives like the NSF’s National AI Research Resource (NAIRR) pilot are beginning to address this, most academic labs remain constrained to proof-of-concept demonstrations on sanitized datasets.
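A back-of-envelope estimate shows why. A widely used approximation puts training compute at roughly 6 × (parameters) × (training tokens) floating-point operations. Dividing by sustained accelerator throughput gives GPU-hours, and multiplying by an hourly rate gives cost. Every input below is an illustrative assumption, not a quote for any real system: model size, token count, peak throughput, utilization, and price per GPU-hour.

```python
def training_cost_usd(params, tokens, peak_flops, utilization, usd_per_gpu_hour):
    """Rough training cost using the ~6 * N * D FLOPs approximation."""
    total_flops = 6 * params * tokens
    sustained = peak_flops * utilization  # FLOP/s actually achieved per GPU
    gpu_hours = total_flops / sustained / 3600
    return gpu_hours, gpu_hours * usd_per_gpu_hour


if __name__ == "__main__":
    # Assumptions: 70B parameters, 2T tokens, 312 TFLOP/s peak per GPU,
    # 40% utilization, $2 per GPU-hour
    hours, cost = training_cost_usd(70e9, 2e12, 312e12, 0.40, 2.0)
    print(f"~{hours:,.0f} GPU-hours, ~${cost:,.0f}")
```

With these particular assumptions, the estimate lands at roughly 1.9 million GPU-hours, or about $3.7 million, before counting failed runs, experimentation, or data preparation. Cost scales linearly with parameters and tokens and inversely with utilization.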

This creates what I call the “demo-to-deployment chasm.” Academic AI tends to excel in controlled conditions but crumble under the complex realities of production environments: incomplete data, adversarial inputs, integration requirements, and the need for real-time decision justification.

In my experience evaluating AI technologies across multiple domains, the software-centric approach to technology transfer fundamentally misunderstands what makes AI commercially viable. AI technologies present unique challenges:

Data Dependency: Most AI innovations require large, high-quality datasets for training and validation. Universities often lack access to the proprietary datasets that make AI systems commercially viable, creating a fundamental barrier to demonstrating market readiness. I’ve observed promising algorithms that work beautifully with clean academic datasets but fail completely when exposed to the messy, incomplete data that characterizes real-world environments.

Integration Complexity: Unlike traditional software that can function independently, AI systems must integrate with existing enterprise infrastructure, data pipelines, and business processes. Academic prototypes rarely address these integration requirements, making it difficult for companies to evaluate commercial potential.

Scalability Requirements: AI systems that work in laboratory settings often fail to scale to production environments. Issues like computational efficiency, memory management, and real-time processing constraints become critical in commercial deployments but are rarely addressed in academic research.
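The data-dependency failure described above often starts with something as mundane as a missing value. The minimal sketch below assumes a hypothetical linear scoring model with made-up weights. The naive scorer works on clean inputs but fails on a record with missing fields. The hardened version imputes training-set medians and reports what it filled in, so the gap is visible rather than silent. Feature names, weights, and medians are all hypothetical.

```python
import math

# Hypothetical model learned from a clean academic dataset
WEIGHTS = {"temp_c": 0.8, "load_pct": 0.5, "fan_rpm": -0.001}
TRAINING_MEDIANS = {"temp_c": 24.0, "load_pct": 55.0, "fan_rpm": 3000.0}


def naive_score(record):
    """Assumes every field is present and numeric, as in the clean lab data."""
    return sum(w * record[name] for name, w in WEIGHTS.items())


def robust_score(record):
    """Impute missing or non-finite fields with training medians; report which were filled."""
    filled, imputed = {}, []
    for name in WEIGHTS:
        value = record.get(name)
        if value is None or not isinstance(value, (int, float)) or not math.isfinite(value):
            value = TRAINING_MEDIANS[name]
            imputed.append(name)
        filled[name] = value
    return naive_score(filled), imputed


if __name__ == "__main__":
    messy = {"temp_c": 31.0, "load_pct": None}  # fan_rpm missing entirely
    try:
        naive_score(messy)
    except (KeyError, TypeError) as err:
        print("naive scorer failed:", type(err).__name__)
    print(robust_score(messy))
```

Median imputation is only one simple choice. The broader point is that production code must decide explicitly what to do with incomplete inputs, a question clean benchmark datasets never force.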

The Cost of Misaligned Commercialization

When university AI research is forced into outdated commercialization frameworks, the predictable result is that promising innovations rarely translate into real-world impact. This misalignment leads to a series of cascading barriers:

No License, No Adoption: Ambiguity around intellectual property, reproducibility gaps, and unclear asset definitions frequently stall or halt licensing negotiations. Without a clear structure for what is being licensed—algorithm, model, data, or process—potential partners hesitate, and promising innovations never leave the lab.

Irreproducibility Undermines Trust: Industry partners need to independently verify research claims before commercial adoption. A lack of access to original data, sufficient computational resources, or comprehensive documentation means that many academic AI systems cannot be reliably reproduced or validated, eroding trust.

Lack of Explainability Blocks Deployment: Many high-performing academic models function as “black boxes.” In regulated industries, this opacity turns even a highly accurate model into an unusable liability. Without explainability and accountability, organizations cannot meet regulatory demands or internal risk standards.

Integration Barriers Raise Costs: Industry partners face significant costs and technical hurdles retrofitting research models for production, often prompting them to abandon university-developed solutions.

Unclear Intellectual Property Slows Deals: It is often uncertain whether a license covers just the code, the trained model, the data pipeline, or the full system. This legal ambiguity introduces risk and can derail agreements at the last minute.
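The irreproducibility problem described above is partly a bookkeeping problem. One common practice is to ship a small manifest with every reported result. The manifest records a fingerprint of the exact training data, the random seed, and the configuration, so a partner can confirm they are rerunning the same experiment. The sketch below uses a toy "experiment" (a seeded subsample mean) as a stand-in for real training. The manifest field names and helper functions are hypothetical.

```python
import hashlib
import json
import random


def data_fingerprint(rows):
    """SHA-256 over a canonical JSON encoding of the dataset."""
    blob = json.dumps(rows, sort_keys=True).encode("utf-8")
    return hashlib.sha256(blob).hexdigest()


def run_experiment(rows, config):
    """Toy stand-in for training: the mean of a seeded random subsample."""
    rng = random.Random(config["seed"])
    sample = rng.sample(rows, config["sample_size"])
    return sum(sample) / len(sample)


def make_manifest(rows, config):
    return {
        "data_sha256": data_fingerprint(rows),
        "config": config,
        "result": run_experiment(rows, config),
    }


def verify(manifest, rows):
    """Partner-side check: same data and same config should give the same result."""
    if data_fingerprint(rows) != manifest["data_sha256"]:
        return False, "dataset differs from the one used in the original run"
    if run_experiment(rows, manifest["config"]) != manifest["result"]:
        return False, "result could not be reproduced"
    return True, "reproduced"


if __name__ == "__main__":
    data = list(range(1000))
    manifest = make_manifest(data, {"seed": 7, "sample_size": 100})
    print(verify(manifest, data))       # (True, 'reproduced')
    print(verify(manifest, data[:-1]))  # fingerprint mismatch is reported
```

Real training adds sources of nondeterminism (GPU kernels, library versions, data ordering) that a manifest like this does not control. Recording them is the same idea applied more thoroughly.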

The aggregate impact of these failures is profound. Universities miss out on revenue opportunities while researchers struggle to see real-world impact, accelerating talent migration to industry. Industry loses a valuable innovation pipeline, turning instead to in-house development. Society is deprived of transformative solutions in critical sectors such as healthcare, agriculture, and climate science.

These costs are not theoretical; they reflect a recurring pattern rooted in the failure to recognize and act on AI’s unique technical and operational needs. Until commercialization practices are adapted—moving beyond the “software” mindset—the gap from lab to market will persist, squandering both educational investment and national competitive advantage.

America still leads in AI research quality, but that advantage erodes rapidly when breakthroughs cannot become deployable systems.

Recognizing the high stakes of misaligned commercialization is only part of the challenge. To understand why these failures occur so predictably, it is essential to examine how traditional technology transfer processes evaluate and manage AI innovations. The shortcomings are often rooted not just in a lack of resources or expertise, but in a fundamental misfit between established practices and the realities of modern AI.

Why Traditional Tech Transfer Frameworks Struggle with AI

Most technology transfer offices have been highly effective when working with traditional technologies, following a well-established linear model: invention → patent → license → product. However, artificial intelligence presents challenges that this approach was not originally designed to address.

Unlike typical inventions—which are often discrete products or processes—AI innovations frequently involve training methodologies, model architectures, data pipelines, or integration frameworks. Their commercial value often derives less from the underlying algorithm and more from how these systems perform in varied, complex, and evolving environments.

Many tech transfer offices—including my own—are encountering these realities across the sector. When AI inventions are evaluated through frameworks built for conventional technologies, certain essential questions can be overlooked:

- What types and sources of data are necessary, and how can partners access them?

- How will this technology integrate with complex enterprise systems?

- What ongoing monitoring, retraining, and support will the system require?

- How will performance and compliance be maintained as data and regulations evolve?

These are not shortcomings of individual offices, but a reflection of how quickly the landscape is changing. Recognizing and adapting to AI’s unique attributes is a sector-wide necessity. By updating evaluation and commercialization criteria to reflect the layered, dynamic nature of modern AI systems, technology transfer offices can unlock far greater impact from academic innovation and help bridge the gap from proof-of-concept to real-world deployment.

The Industry Perspective: What Companies Actually Need

Having worked on both sides of the university–industry boundary, I have seen firsthand that there is often a significant gap between what companies require for successful AI adoption and what universities are typically prepared to offer.

Companies need proven performance on real data—not cleaned academic datasets. They need integration roadmaps that show clear paths for connecting AI systems to existing technology stacks. They need ongoing support and updates because AI systems require continuous monitoring and improvement. They need regulatory compliance assistance for deployment in regulated industries. And they need scalability demonstrations that prove systems can handle enterprise-level deployments.

Here is the disconnect I observed repeatedly in corporate settings: industry does not just want raw performance metrics. It needs systems that integrate seamlessly, comply with regulations, explain their decisions, and can be supported long-term. Academic spinoffs would routinely present benchmark-dominating models that were unexplainable, undocumented, or impossible to maintain. These innovations lost out to simpler, more explainable alternatives, not because they were scientifically inferior, but because they were not operationally viable.

In industry, viability beats novelty every time.
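One reason simpler models win in these settings is that their decisions break down into parts a reviewer can check. The sketch below assumes a hypothetical linear risk score. It reports each feature's contribution relative to a baseline alongside the score, and those contributions sum exactly to the difference between this score and the baseline score. Weights, baseline, and feature names are illustrative only. Attribution methods such as SHAP extend this additive idea to more complex models.

```python
def explain_linear(weights, bias, baseline, x):
    """Score x with a linear model and attribute the score to each feature.

    contribution_k = w_k * (x_k - baseline_k); the contributions sum to
    score(x) - score(baseline).
    """
    score = bias + sum(w * x[k] for k, w in weights.items())
    base_score = bias + sum(w * baseline[k] for k, w in weights.items())
    contributions = {k: w * (x[k] - baseline[k]) for k, w in weights.items()}
    ranked = sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    return score, base_score, ranked


if __name__ == "__main__":
    weights = {"debt_ratio": 2.0, "late_payments": 0.7, "years_employed": -0.3}  # hypothetical
    baseline = {"debt_ratio": 0.3, "late_payments": 1.0, "years_employed": 5.0}
    applicant = {"debt_ratio": 0.6, "late_payments": 4.0, "years_employed": 2.0}
    score, base, ranked = explain_linear(weights, -1.0, baseline, applicant)
    print(f"score={score:.2f} (baseline {base:.2f})")
    for name, c in ranked:
        print(f"  {name}: {c:+.2f}")
```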

Conclusion

The AI commercialization gap is not inevitable. It is the predictable result of applying industrial-age frameworks to information-age technologies. But recognizing the problem suggests the solution: we need AI-native commercialization models designed for AI’s unique properties.

Some universities have found ways to bridge this gap. Stanford's AI commercialization model offers an instructive contrast: it combines flexible licensing terms, embedded entrepreneurship programs, and technical bridge funding that extends research beyond publication. Companies like Palantir, which emerged from academic research, demonstrate that university AI can reach market, but these successes required approaches fundamentally different from traditional tech transfer methods.

The question is not whether university AI can be commercialized, but whether our current systems are equipped to do it systematically rather than by exception.

What’s Next: From Gap to Action

This post examined the AI commercialization gap—why it exists, how it manifests, and why traditional tech transfer models struggle to close it.

At the core of the problem is a misalignment: AI is often handled like generic software, evaluated with outdated tools, and pushed into IP frameworks that don’t fit. The result? Innovation gets delayed, undervalued, or lost altogether.

In the next post in this series, I’ll share frameworks for what a modern, AI-native commercialization model could look like—approaches that align with AI’s technical realities and market requirements.

Rather than forcing AI into traditional frameworks, we’ll explore how universities can rethink evaluation, support structures, and partnership models to bridge the gap between breakthrough research and deployable systems.

The stakes couldn’t be higher. While we debate frameworks, international competitors are building theirs. The commercialization models we develop today will determine which AI innovations transform industries—and which remain buried in academic archives.

Innovation doesn’t stop at discovery. It stops when we fail to translate.
