Navigating the Future of AI and Society

July 24, 2024

Imagine a world where machines make life-altering decisions about your health, finances, and freedom. Now, ask yourself: who’s responsible when these decisions go wrong? This isn’t a scene from a sci-fi novel—it’s the reality we’re rapidly approaching in the age of artificial intelligence. As AI systems increasingly influence our lives, the concept of Responsible AI has emerged as a crucial framework for ensuring these powerful tools benefit society while minimizing risks.

The AI Dilemma: Innovation vs. Caution

In Silicon Valley, a fascinating debate is unfolding that captures the essence of the Responsible AI dilemma. On one side, we have tech luminaries like Elon Musk warning of AI as “our biggest existential threat.” His cautionary stance reflects a deep concern about the potential for AI to outpace human control, leading to unforeseen and possibly catastrophic consequences. On the other side, pioneers like Yann LeCun, Chief AI Scientist at Meta, argue that “the risk of slowing AI is much greater than the risk of disseminating it.” LeCun’s perspective emphasizes the transformative potential of AI to solve complex global challenges and drive unprecedented innovation.

This tension between innovation and caution forms the crux of today’s discourse on Responsible AI. It’s a balancing act that requires us to harness the immense potential of AI while simultaneously establishing robust safeguards to protect individuals and society. But what exactly does Responsible AI entail, and why should it matter to you?

Responsible AI: From Asimov to Algorithm

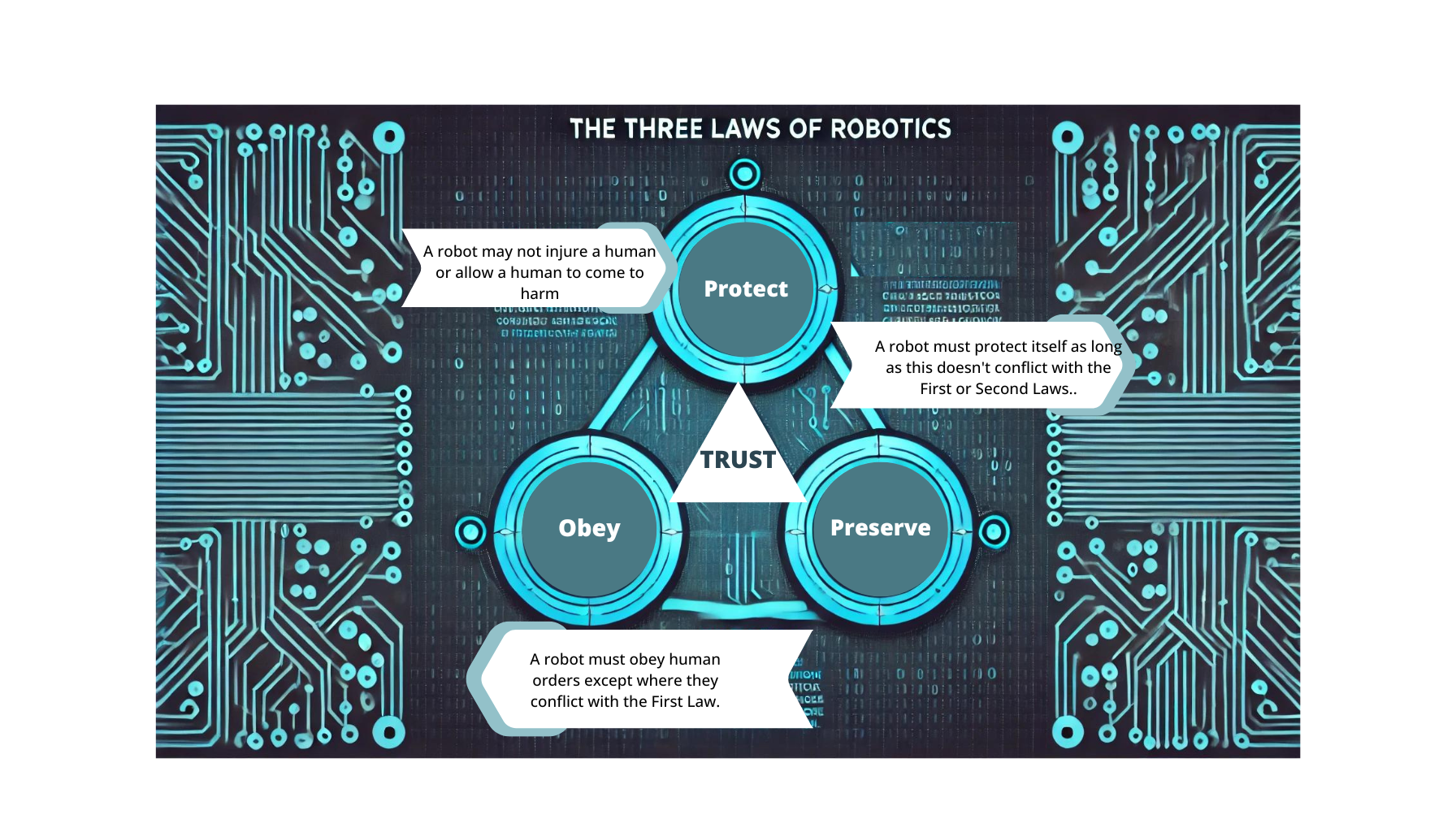

Responsible AI isn’t just a buzzword. It is a set of principles guiding the development and deployment of AI systems so that they benefit society while minimizing risks. The roots of Responsible AI stretch back further than you might think. In 1942, science fiction author Isaac Asimov introduced the Three Laws of Robotics in his short story “Runaround.” These laws, designed to ensure robots (and by extension, artificial intelligences) would not harm humans, laid the groundwork for thinking about the ethical development of AI. What was once a literary device has become a cornerstone of a multi-billion-dollar industry and a focal point of global policy discussions.

Today, Responsible AI has evolved into a comprehensive framework encompassing principles such as transparency, fairness, privacy, accountability, safety, and human oversight. These principles aim to create AI systems that are not just powerful but also trustworthy and beneficial for all users. For instance, the principle of transparency and explainability calls for an AI system’s decision-making process to be comprehensible, so that stakeholders can understand how it reached a given outcome. This is crucial in sectors like finance or healthcare, where AI decisions can have significant impacts on individuals’ lives.

The Real-World Impact of AI Ethics

The stakes of AI ethics became starkly apparent in 2016, when ProPublica reported racial bias in COMPAS, an algorithm used to assess criminal defendants’ risk of reoffending. Its analysis found that Black defendants who did not reoffend were nearly twice as likely as white defendants to be misclassified as higher risk. This wasn’t just a technical glitch: it had consequences for individuals facing the justice system. The case underscored the critical importance of fairness and non-discrimination in AI systems, highlighting how unchecked algorithms can perpetuate and even exacerbate societal inequalities.

Navigating the AI Landscape: Industry and Government Responses

Tech giants and governments alike are grappling with how to put Responsible AI principles into practice, and industry leaders are taking diverse approaches. Elon Musk, consistent with his cautionary stance, advocates strict oversight of AI development. Google CEO Sundar Pichai calls for a more balanced approach to regulation, acknowledging the need for guardrails while ensuring innovation isn’t stifled. Microsoft CEO Satya Nadella champions “democratizing AI,” focusing on inclusivity and the broad range of benefits that AI can offer to society.

Governments, too, are stepping up to establish frameworks for responsible AI practices. In the United States, President Biden’s Executive Order on Safe, Secure, and Trustworthy Artificial Intelligence represents a significant milestone in federal AI governance. This comprehensive order addresses critical issues such as AI-related privacy concerns, algorithmic discrimination, and workforce transformation, setting the stage for responsible development and deployment of AI technologies in the country.

On the global stage, the European Union has made significant strides with its AI Act, adopted in 2024. This ambitious law establishes a risk-based regulatory framework for AI across the EU, in which the obligations placed on a system scale with the level of risk it poses. By setting clear rules and standards, the AI Act seeks to foster public trust in AI technologies while promoting innovation and safeguarding the well-being of individuals and society as a whole.

The Path Forward: Your Role in Shaping AI’s Future

As AI continues to permeate our lives, the principles of Responsible AI will become increasingly crucial. But what does this mean for you?

- Stay Informed: Understanding the basics of AI and its ethical implications empowers you to make informed decisions about the technology you use.

- Demand Transparency: As a consumer, your voice matters. Demand explainable AI from the companies whose products you use.

- Engage in the Dialogue: Participate in public discussions about AI regulation. Your perspective is valuable in shaping policies that will affect us all.

- Support Ethical Innovation: Choose products and services from companies that prioritize Responsible AI principles.

The future of AI isn’t set in stone—it’s being shaped right now, with each decision we make as individuals and as a society. By embracing the principles of Responsible AI, we can harness the transformative power of this technology while safeguarding our values and well-being.

As we stand at this technological crossroads, remember: the most powerful algorithm is the one guided by human wisdom and ethics. Let’s ensure that as AI advances, it does so in a way that elevates humanity rather than diminishes it.

What steps will you take to promote Responsible AI in your personal and professional life? The future is waiting for your input.
